#OpenData and Disaster Management

As I reflect on the past month in my Government 3.0 class with Beth Noveck, I am reminded of how much I am learning and how much it relates to my current and future work in Disaster Management. Overall, this class is an opportunity to learn about concurrent and ongoing initiatives that are furthering the goals of government and its constituents. But more importantly, the class has allowed me to translate the many things going on government-wide to the unique challenges of Disaster Management.

The Unique Challenges of Disaster Management

Scalability. We plan and prepare for events the best we can by building response capacity and training our staff, volunteers, and partners to respond appropriately. But at the end of the day, when a disaster strikes, we need more resources to help us deal with the volume of information and needs coming from every direction. In addition, the more complex the incident, the more important effective coordination becomes as more and more partners join the response.

The more data that is easily available in machine-readable format, the more we can innovate with better applications that let us slice and dice data and turn it into actionable information for decision-making at all levels and in all parts of the response. As a result, we as the collective response can make more informed real-time decisions that begin to really do the greatest good for the greatest number. The more people (citizens included) who have access to the right information at the right time, the more they can help alleviate the response burdens of governments and non-profits during major disasters.

High Impact/Low Frequency. Many industries benefit from the mounds of data they sit on for consistent and repeatable events and processes. As a result, they are better able to learn and adapt to better manage risk. However, we are often planning for sentinel events that we may or may not know will occur and for which we often don't know the exact impacts. As a result, we make many planning assumptions based on a combination of scientific evidence, experience and pure conjecture. We need better "response data" to validate our assumptions and activities. What is it about our response that really worked well? How come?

We work hard to complete after action reports that detail lessons learned and document best practices. LLIS.gov contains a lot of these reports, which are accessible to other industry professionals. But who has the time to sift through everything? What if you could slice and dice all the information from every report to help answer your specific questions? What if we could index all of the data and information contained in these documents to identify national trends? Our ability to learn from our past would skyrocket.
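To make the indexing idea a little more concrete, here is a minimal sketch in Python. It assumes the report text has already been extracted into plain strings; the report ids and snippets below are invented placeholders, not real after action reports, and the function names are hypothetical. It builds a simple word index and then asks which reports mention a given set of terms.

```python
import re
from collections import defaultdict

# Hypothetical snippets standing in for after action report text.
REPORTS = {
    "report_a": "Radio interoperability failed between county and state teams.",
    "report_b": "Shelter staffing was short; radio interoperability worked well.",
    "report_c": "Shelter staffing and volunteer check-in caused delays.",
}

def build_index(reports):
    """Map each lowercase word to the set of report ids that contain it."""
    index = defaultdict(set)
    for report_id, text in reports.items():
        for word in re.findall(r"[a-z]+", text.lower()):
            index[word].add(report_id)
    return index

def reports_mentioning(index, *terms):
    """Return the sorted report ids that contain every given term."""
    sets = [index.get(term.lower(), set()) for term in terms]
    return sorted(set.intersection(*sets)) if sets else []

index = build_index(REPORTS)
print(reports_mentioning(index, "radio", "interoperability"))
print(reports_mentioning(index, "shelter", "staffing"))
```

A real version would need far more than word matching (synonyms, report metadata, dates, incident types), but even this toy shows why machine-readable reports matter: once the text is indexed, a question that would take hours of reading becomes a one-line query.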

Open data is not just about the ability to view it; it is about the ability to mash it together to gain new insights that were previously undetectable.

However, our existing evidence base is largely anecdotal and rests on subject matter expertise alone. Ongoing research is changing our understanding of management and coordination, but decisions are still made on anecdotal evidence and expertise. So what if we could add meaningful metrics and data to the equation? Essentially, open data would help us review not just what worked and what didn't, but the degree to which it worked or didn't work. Data can come from internal or external systems. The more "open" and available it is, the better chance we have of collecting meaningful data that helps us learn from our past.

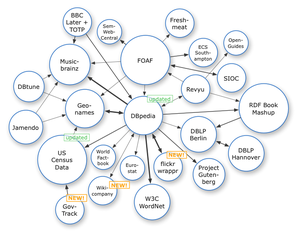

Interdependencies. If we could do everything alone, we wouldn't be facing many of the challenges we face today. However, we need to balance the anticipated risk with our capabilities in a fiscally responsible, yet politically acceptable manner. So we turn to our neighbors, non-profits, private sector organizations and other government agencies to become force multipliers. This creates significant coordination and management challenges as the list grows and grows. We need more data about what these relationships look like.

Data allows us to actually map interdependencies that could potentially result in catastrophic failures during an event. We can also better identify and prioritize these interdependencies to help improve system resilience. After all, regardless of where or how we operate, we ALWAYS operate in some sort of system that has to work together in order to perform well. MindAlliance has a great tool that helps organizations identify gaps and weaknesses in their preparedness. With additional data, we can further validate planning assumptions in relation to our dependencies to help distribute risk as best as possible.
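As a rough illustration of what "mapping interdependencies" could look like once the data exists, here is a minimal Python sketch. The dependency list is entirely made up for illustration, and this is not how MindAlliance or any other tool works; it simply treats dependencies as a graph and walks it to see what would be affected if one piece failed.

```python
from collections import defaultdict, deque

# Hypothetical dependencies: each key relies on the items in its list.
DEPENDS_ON = {
    "shelter": ["power", "water", "volunteers"],
    "hospital": ["power", "water", "fuel"],
    "water": ["power"],
    "fuel": ["roads"],
    "volunteers": ["roads"],
}

def build_dependents(depends_on):
    """Invert the map: for each item, which items rely on it directly."""
    dependents = defaultdict(set)
    for item, needs in depends_on.items():
        for need in needs:
            dependents[need].add(item)
    return dependents

def affected_by(failure, depends_on):
    """Return everything that directly or indirectly relies on `failure`."""
    dependents = build_dependents(depends_on)
    seen, queue = set(), deque([failure])
    while queue:
        current = queue.popleft()
        for item in dependents.get(current, ()):
            if item not in seen:
                seen.add(item)
                queue.append(item)
    return sorted(seen)

print(affected_by("power", DEPENDS_ON))
print(affected_by("roads", DEPENDS_ON))
```

The interesting part is the indirect effects: in this toy example a road closure never touches the hospital directly, yet it still shows up in the result because fuel deliveries depend on roads. Those hidden chains are the ones shared data would help us find before an event rather than during it.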

Government Led, Community Reliant. Building off the issues with interdependencies, we also have a mismatch occurring in that initiatives are often government led, but effective responses to disasters are extraordinarily community reliant. President Obama and FEMA have picked up on this issue with their "Whole Community" approach outlined in PPD-8. But in moving forward, do we have the resources needed for a true "Whole Community" approach? In the past, many great initiatives have simply failed due to a lack of funding or time (or the next disaster).

Data will help us prioritize our efforts for maximum effectiveness. And if the whole community is to participate, they need data with which to help. The more data we can work with, the more we can turn planning and interdependency "assumptions" into "factual" planning points that leave us better prepared for the impacts we actually face, not just what we "think" we face. Essentially, open data can really help us perform better risk and gap analyses to better inform our mitigation, preparedness, response and recovery efforts.

"Zero Fail." This term has been thrown around a lot in the industry. Most often, it is used in the context of fear of trying something new. Essentially, we need to do what we know works in order to not "fail." Hurricanes Katrina, Ike, Gustav, Irene, and Sandy are quickly showing how inadequate this assumption is. Our missions are extraordinarily important, but failures are how we learn best. I would take this a step further and argue that mini-failures are likely the best way of learning. Eric Ries's book The Lean Startup provides some great anecdotes for failing fast, but still accomplishing the mission.

Developing an "experimentation" culture based on real-time and meaningful data is essential. Data will help us fail fast, while still helping us accomplish our mission. As a result, we can better identify ways to mitigate failure in the future and maximize the effectiveness of our response. We are always hailed as a "dynamic" industry, but what if we could be even more dynamic, able to fail and react in the moment? What if we could change course easily? Open data enables these things. Granted, some of this may be a few years off, but it highlights what is possible, even more so than we might think!

Overall, #OpenData represents one of the most important ways we can learn and advance as an industry while dealing with its unique challenges.

What do you think? Have you had any wins with OpenData at your organization or in your community? What have you been able to build with open data?
